Its program consists of a one-hour lecture followed by a discussion. Each lecture is based on an (internationally) exceptional or remarkable achievement of the lecturer, presented in a way that is comprehensible and interesting to the broad computer science community. The lectures are in English.

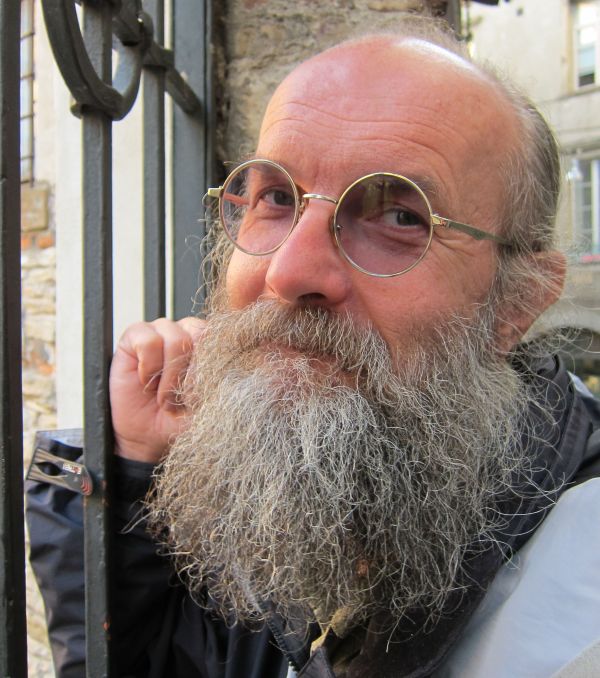

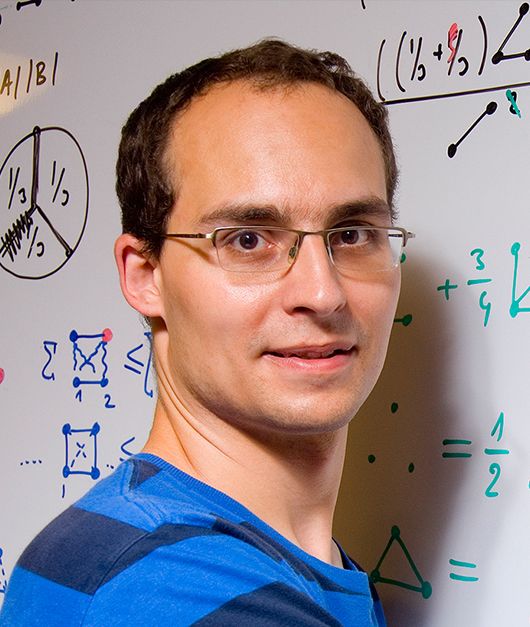

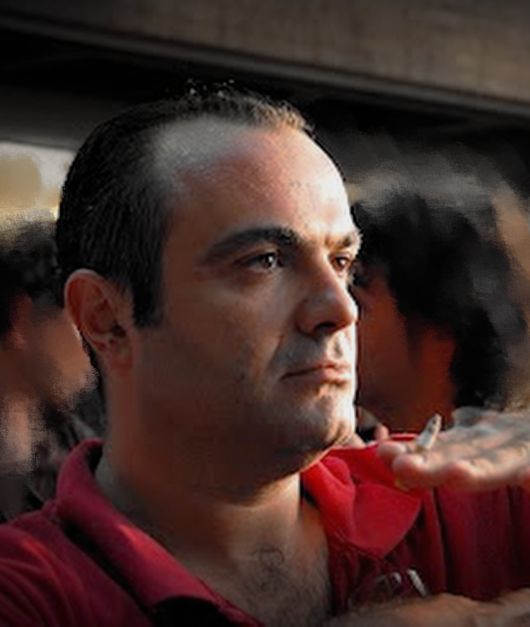

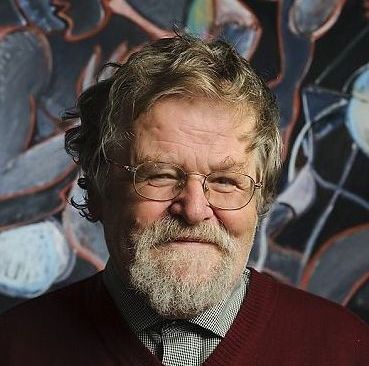

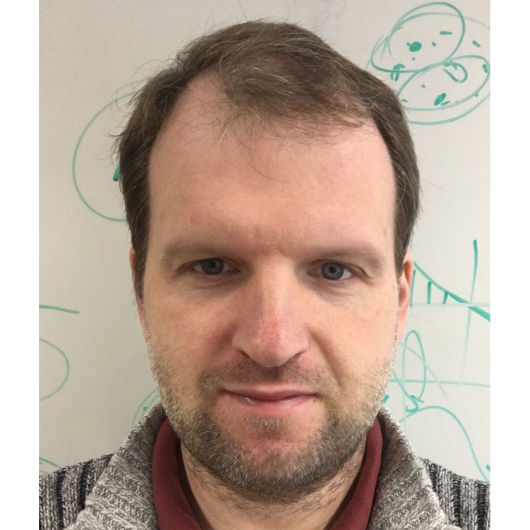

The seminar is organized by a committee consisting of Roman Barták (Charles University, Faculty of Mathematics and Physics), Jaroslav Hlinka (Czech Academy of Sciences, Computer Science Institute), Michal Chytil, Pavel Kordík (CTU in Prague, Faculty of Information Technologies), Michal Koucký (Charles University, Faculty of Mathematics and Physics), Jan Kybic (CTU in Prague, Faculty of Electrical Engineering), Michal Pěchouček (CTU in Prague, Faculty of Electrical Engineering), Jiří Sgall (Charles University, Faculty of Mathematics and Physics), Vojtěch Svátek (University of Economics, Faculty of Informatics and Statistics), Michal Šorel (Czech Academy of Sciences, Institute of Information Theory and Automation), Tomáš Werner (CTU in Prague, Faculty of Electrical Engineering), and Filip Železný (CTU in Prague, Faculty of Electrical Engineering).

The idea of organizing this seminar emerged from discussions among representatives of several research institutes on how to avoid the undesirable fragmentation of the Czech computer science community.