Forty-ninth meeting of the Prague Computer Science Seminar (Pražský informatický seminář)

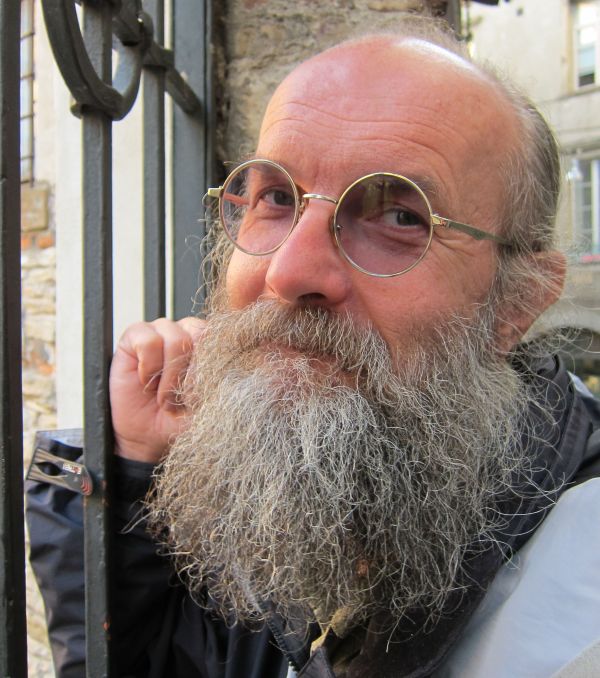

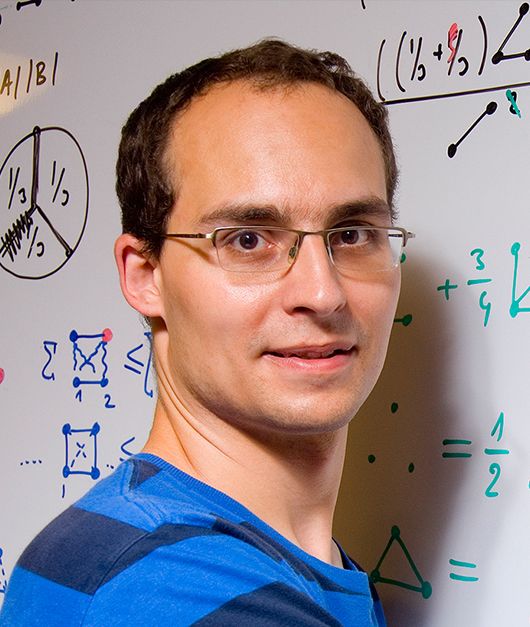

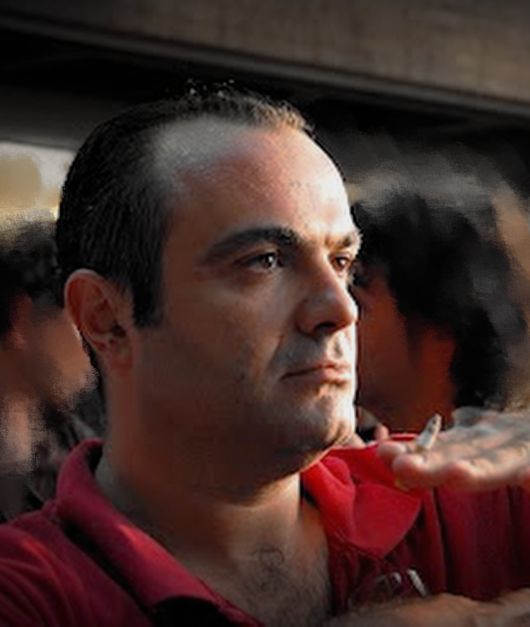

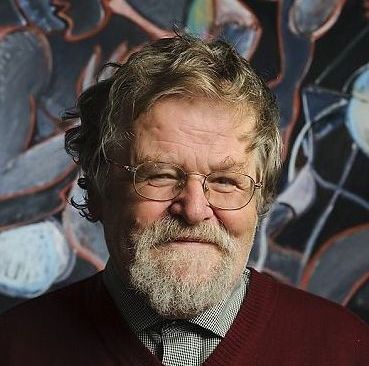

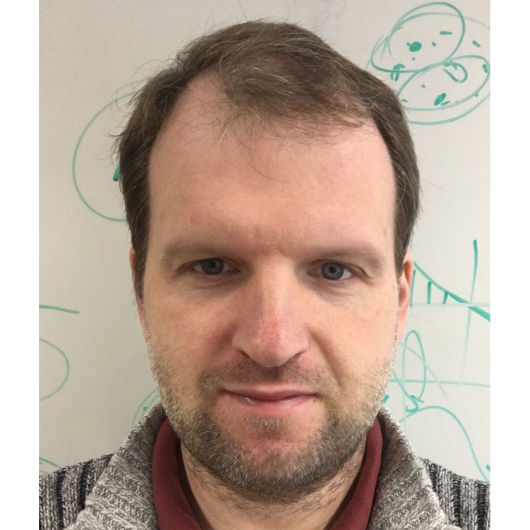

Richard Sutton

The Alberta Plan for AI Research

Sutton presents a strategic research plan based on the premise that a genuine understanding of intelligence is imminent and, when it is achieved, will be the greatest scientific prize in human history. Contributing to this achievement and sharing in its glory will require laser-like focus on its essential challenges; identifying those, however provisionally, is the objective of the Alberta Plan for AI Research.